Hear What I Hear

Recently, research groups have shown that machine learning strategies can read images from a person's brain as they are seen, using both fMRI and EEG. These strategies have, however, not yet been applied to auditory stimuli. In theory, it should also be possible to record imagined visual or auditory perceptions. This project aimed to use the same principles to extract simple musical details from EEG, and potentially even imagined music.

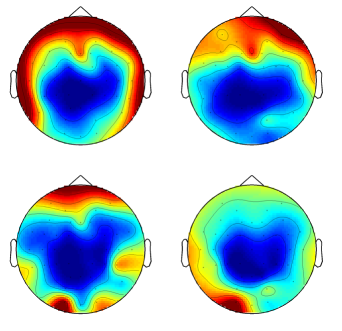

I had several volunteers wear a 64-channel EEG helmet and listen to several stimuli, then separately imagine each stimulus in their head. With this dataset, we may eventually be able to find common patterns that allow us to predict the imagined sound, using machine learning or neural networks.

In its current state, only about 25% of classifications among 5 sounds were correct. Not great, admittedly, but better than the 20% expected by chance. There is potential.
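As a rough sanity check on "better than chance": with 5 equally likely sounds, guessing gives 20%. Whether 25% is reliably above that depends on how many trials were classified, which is not stated here. The sketch below runs a one-sided exact binomial test for two hypothetical trial counts (40 and 400). The counts and the function names are assumptions for illustration, not values from this project.

```python
from math import comb


def chance_level(n_classes: int) -> float:
    """Accuracy expected from uniform random guessing among n_classes."""
    return 1.0 / n_classes


def binomial_p_value(n_correct: int, n_trials: int, p_chance: float) -> float:
    """One-sided exact binomial test: P(X >= n_correct) if the classifier only guesses."""
    return sum(
        comb(n_trials, k) * p_chance**k * (1 - p_chance) ** (n_trials - k)
        for k in range(n_correct, n_trials + 1)
    )


if __name__ == "__main__":
    p_chance = chance_level(5)  # 5 sounds, as in the text
    # Hypothetical trial counts; the real number of trials is not given above.
    for n_trials in (40, 400):
        n_correct = n_trials // 4  # 25% correct, as reported
        p = binomial_p_value(n_correct, n_trials, p_chance)
        print(f"{n_correct}/{n_trials} correct, chance={p_chance:.0%}, p={p:.4f}")
```

The same 25% accuracy is weak evidence with few trials and much stronger evidence with many, so the trial count is worth reporting alongside the accuracy.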

Anyone interested in collaborating on this project should contact me.